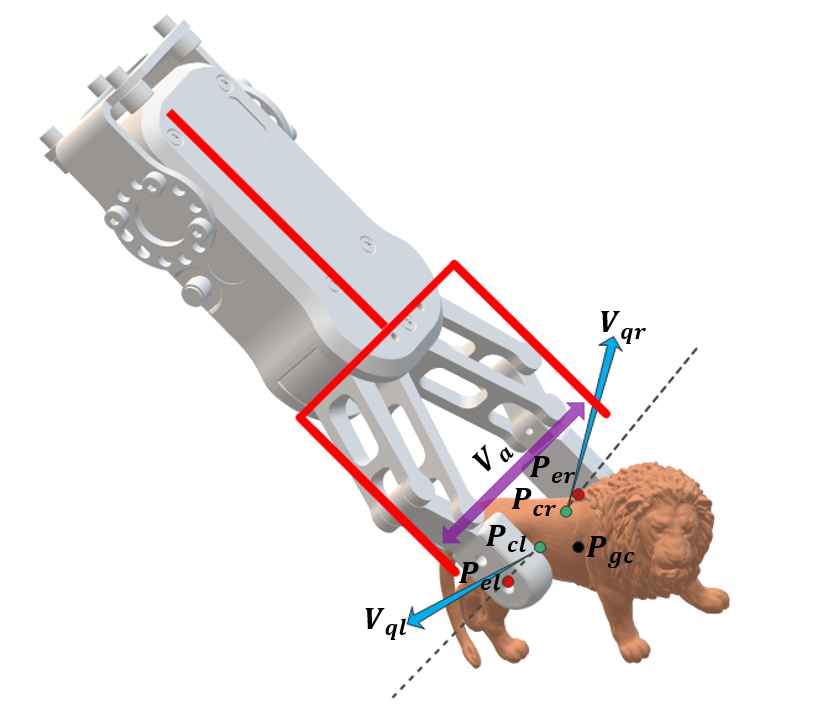

- Feb 2022: FGC_GraspNet has been accepted to ICRA 2022.

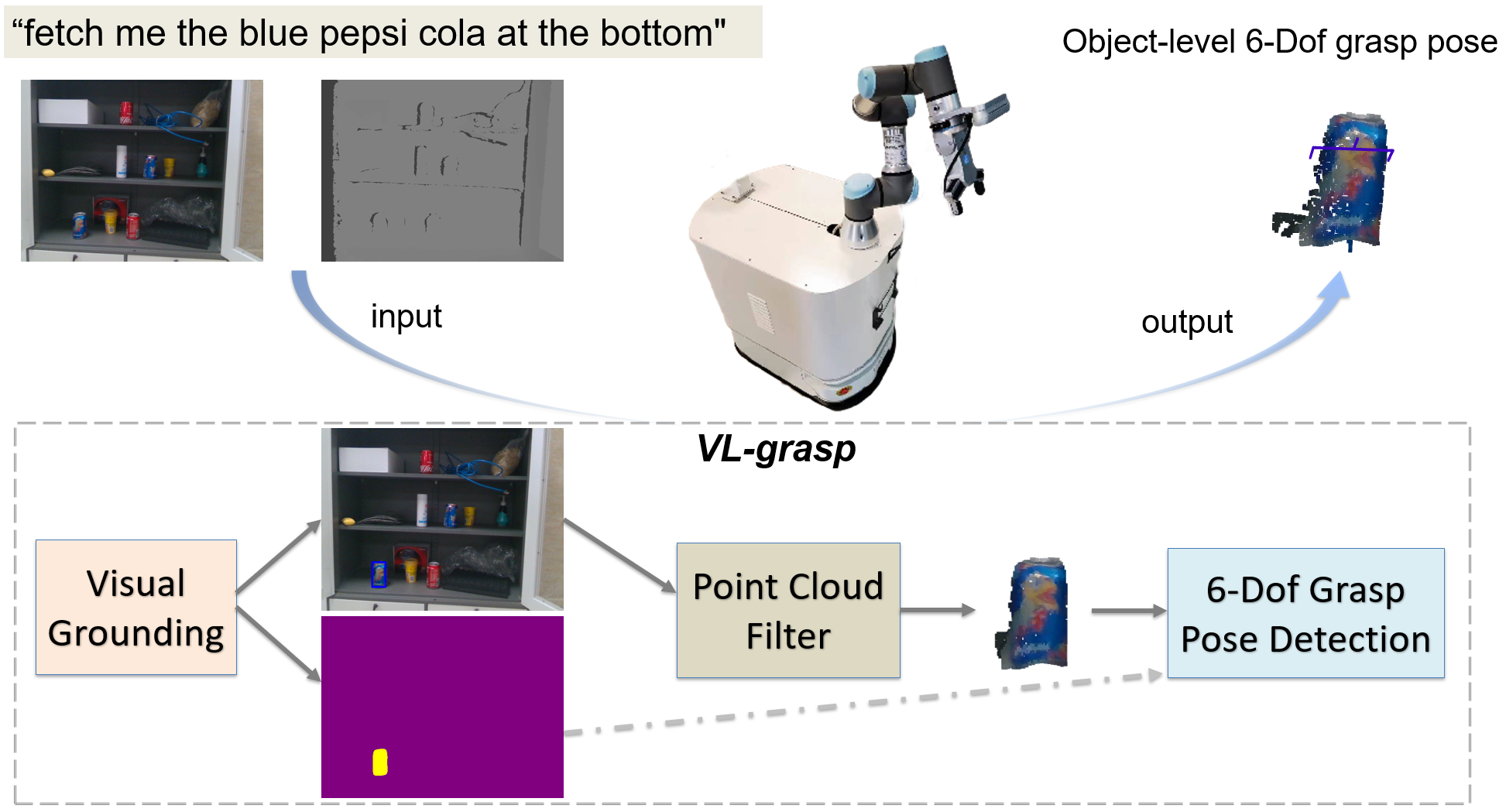

- Jun 2022: We built a Visual Grounding dataset for robotics, RoboRefIt.

- Mar 2023: I led the CV-AI team in the 2023 Intel Indoor Robot Learning Grand Challenge in Shanghai, China, where we won the FIRST PRIZE in the Recognition Track (Few-shot detection task) and the SECOND PRIZE in the Manipulation Track (Grasping task and Feeding task).

- Jun 2023: I received the honor of 2023 Outstanding Master’s Graduate Student from Tsinghua University (top 2%), as well as the honor of 2023 Outstanding Master’s Thesis from Tsinghua University (top 5%).

- Jun 2023: VL_Grasp has been accepted to IROS 2023.

Hello, I'm Yuhao Lu

I received my master's degree from the Department of Electronic Engineering, Tsinghua University, where I was advised by Shengjin Wang. I work on robotics and computer vision.